Abstract

Estimating the transferability of publicly available pre-trained models to a target task has become an important problem in transfer learning in recent years. Existing efforts propose metrics that allow a user to choose one model from a pool of pre-trained models without having to fine-tune each model individually to identify the best one. With the growth in the number of available pre-trained models and the popularity of model ensembles, it also becomes essential to study the transferability of multi-source model ensembles for a given target task. The few existing efforts study transferability in such multi-source ensemble settings using only the outputs of the classification layer and neglect possible domain or task mismatch. Moreover, they overlook the most important factor when selecting the source models, namely the cohesiveness among them, which can affect both the performance of the ensemble and the confidence of its predictions. To address these gaps, we propose a novel Optimal tranSport-based suBmOdular tRaNsferability metric (OSBORN) to estimate the transferability of an ensemble of models to a downstream task. OSBORN collectively accounts for image domain difference, task difference, and cohesiveness of models in the ensemble to provide reliable estimates of transferability. We gauge the performance of OSBORN on both image classification and semantic segmentation tasks. Our setup includes 28 source datasets, 11 target datasets, 5 model architectures, and 2 pre-training methods. We benchmark OSBORN against the current state-of-the-art metrics MS-LEEP and E-LEEP and consistently outperform them.

Task Objective

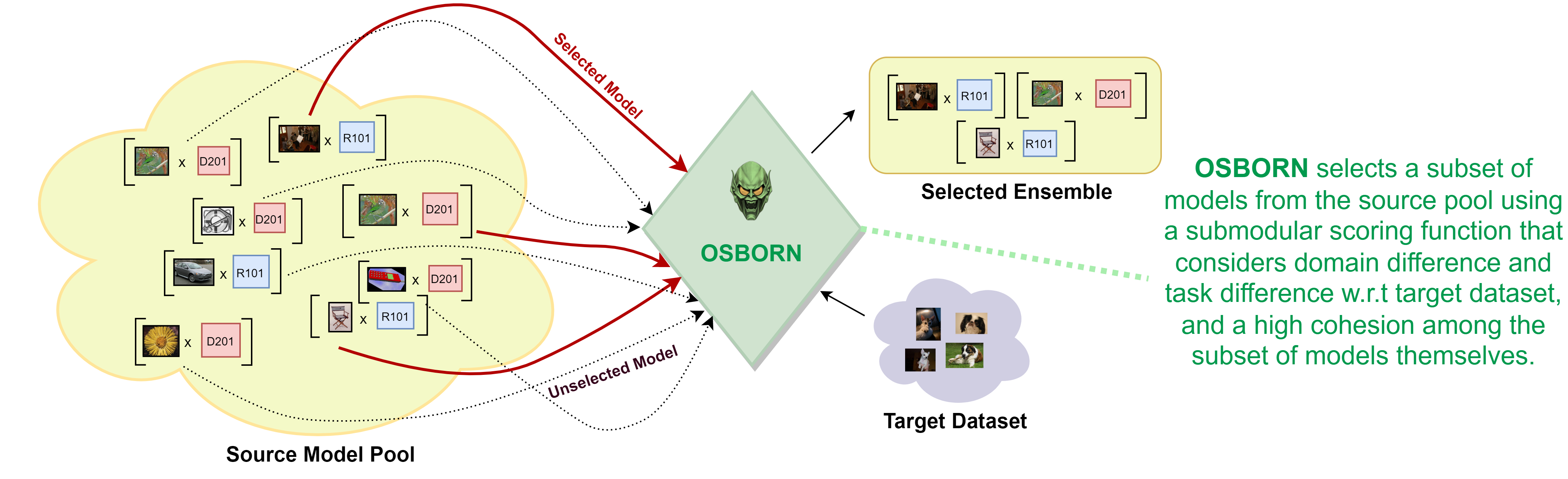

Given a target dataset and a source pool of M datasets, each accompanied by a pre-trained model, OSBORN uses a submodular approach to select a subset of models (an ensemble) such that the domain difference and task difference are low and the model cohesion is high. Please refer to Section 1 of the paper for more details.
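To make the selection step concrete, the following is a minimal sketch of greedy subset selection of the kind commonly paired with submodular objectives. It is not the OSBORN implementation: the function names (`greedy_select`, `toy_score`), the model names, and all numeric values are hypothetical, and the toy score merely stands in for a metric that rewards low domain difference, low task difference, and high cohesion (modeled here as low pairwise disagreement). The paper defines the actual metric.

```python
from itertools import combinations
from typing import Callable, FrozenSet, List


def greedy_select(
    candidates: List[str],
    score: Callable[[FrozenSet[str]], float],
    k: int,
) -> List[str]:
    """Grow an ensemble of at most k models, adding the model with the
    largest positive marginal gain in each round."""
    selected: List[str] = []
    remaining = list(candidates)
    current = score(frozenset())
    while remaining and len(selected) < k:
        best_model, best_value = None, current
        for model in remaining:
            value = score(frozenset(selected + [model]))
            if value > best_value:
                best_model, best_value = model, value
        if best_model is None:
            break  # no remaining model improves the score
        selected.append(best_model)
        remaining.remove(best_model)
        current = best_value
    return selected


# Hypothetical per-model differences and pairwise disagreements (made-up values).
domain_diff = {"A": 0.2, "B": 0.9, "C": 0.3}
task_diff = {"A": 0.1, "B": 0.8, "C": 0.2}
disagreement = {
    frozenset({"A", "C"}): 0.1,
    frozenset({"A", "B"}): 0.6,
    frozenset({"B", "C"}): 0.6,
}


def toy_score(subset: FrozenSet[str]) -> float:
    """Assumed stand-in score: each model contributes 1 minus its domain and
    task differences; each pair is penalized by its disagreement."""
    individual = sum(1.0 - domain_diff[m] - task_diff[m] for m in subset)
    pairwise = sum(
        disagreement.get(frozenset(pair), 0.0)
        for pair in combinations(sorted(subset), 2)
    )
    return individual - pairwise


ensemble = greedy_select(["A", "B", "C"], toy_score, k=3)
print(ensemble)
```

With these made-up numbers the sketch keeps models A and C and stops before adding B, whose large domain and task differences and high disagreement with the others would lower the score.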

Results

Image Classification using fully-supervised learning

Table 3: Results for Image Classification using fully-supervised learning.

Image Classification using self-supervised learning

Table 4: Results for Image Classification using self-supervised learning.

BibTeX

@article{park2021nerfies,
  author  = {placeholder},
  title   = {placeholder},
  journal = {ICCV},
  year    = {2023},
}